Your AI agent is making financial decisions. Your LLM is processing healthcare data. Your zero-knowledge proof is securing millions in a blockchain bridge. When customers or compliance officers ask, "How do we know this is running securely?" — what do you tell them?

"Trust me bro" isn't good enough anymore.

Traditional cloud platforms ask you to trust the provider. Security audits are expensive, they're outdated the moment your code changes, and they don't verify what's actually running in production right now. Compliance officers want proof, not promises.

That's why we built the Trust Center — the first and only automated verification platform that gives you public, cryptographic proof that your applications are running exactly as coded, on genuine security hardware, with zero hidden modifications.

While hardware attestation reports come directly from Intel TDX and Nvidia Confidential Computing processors, only applications built on dstack — Phala's confidential deployment stack — generate the comprehensive evidence needed for Trust Center verification. Dstack captures everything: from hardware boot measurements to application code hashes to encrypted key management, creating an unbroken chain of trust that the Trust Center can verify end-to-end.

What is the Trust Center?

Think of the Trust Center as a "public security certificate" that automatically updates with every deployment. It's a shareable URL that proves four critical things about your application:

- Hardware Proof: Your app runs on genuine Intel TDX and Nvidia Confidential Computing hardware — not simulated environments or compromised systems

- Code Proof: The exact Docker configuration deployed matches your source code — no hidden modifications possible

- KMS Proof: Encryption keys for data and memory are derived from hardware-protected Key Management Service running in TEE — keys never exist outside secure enclaves

- Network Proof: For gateway deployments, TLS certificates are managed entirely within the TEE — zero-trust HTTPS that even Phala can't intercept

Every application deployed to Phala Cloud automatically receives a Trust Center verification report. Deploy your app, get your proof.

The report is public by design. You can share it with customers, auditors, or regulators. Anyone can verify your deployment integrity without needing cryptography expertise or access to your infrastructure.

Three Game-Changing Use Cases

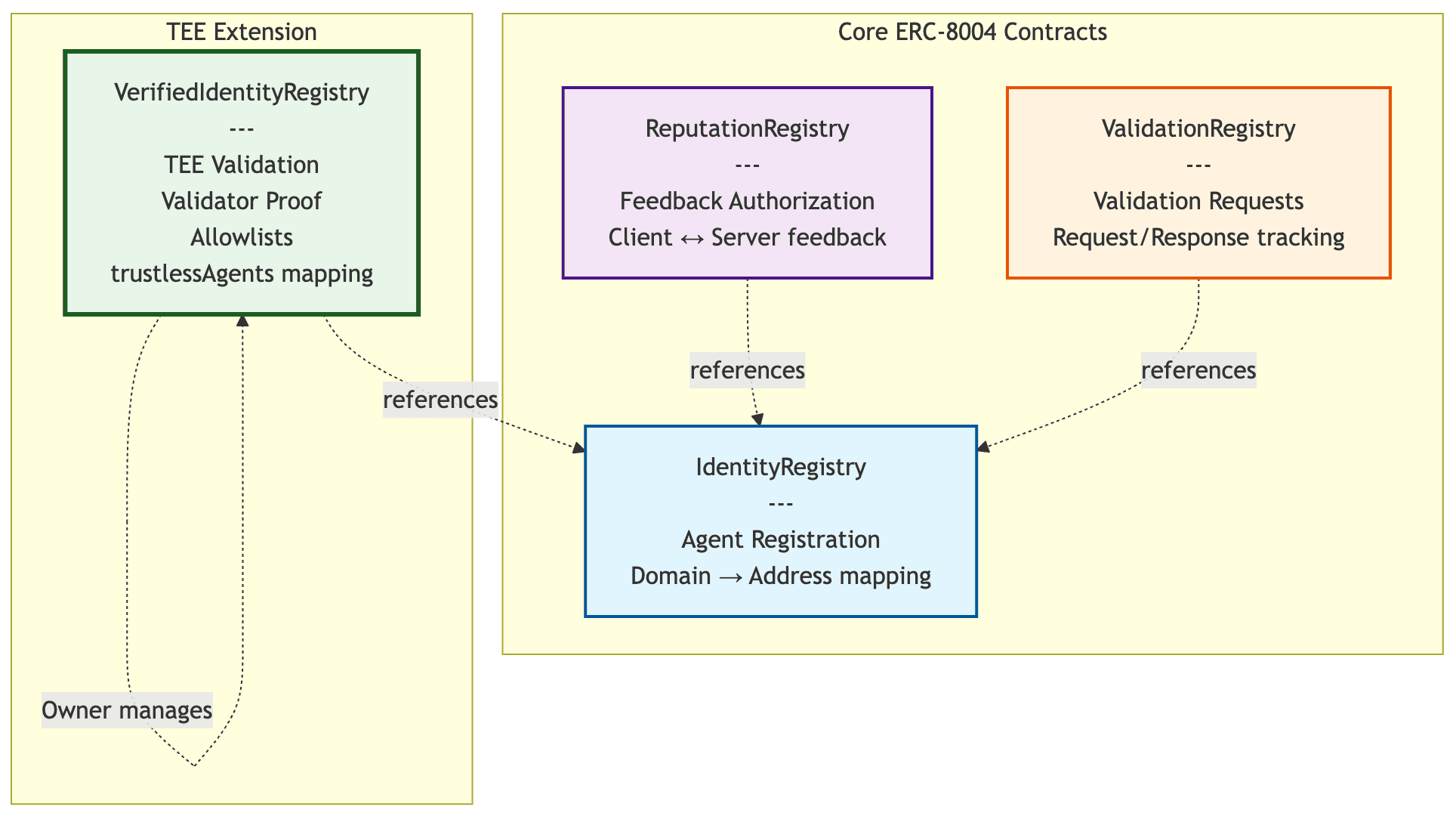

1. Verifiable AI Agents (ERC-8004 Compliance)

AI agents are becoming autonomous economic actors — they execute trades, manage wallets, sign transactions, and make financial decisions. But how do you prove to users that your agent is safe?

The Ethereum community is addressing this with ERC-8004 — an emerging standard for verifiable agents. The standard recognizes that users need to verify agent integrity before granting permissions. "Trust the developer" isn't enough when agents have access to your funds.

The Problem:

- Is the agent running the model you claim, or a cheaper alternative?

- Is it leaking conversation data to the operator?

- Has it been modified with hidden instructions you didn't authorize?

Traditional deployment gives you no way to answer these questions. The code running in production is invisible to users.

Trust Center Solution:

The Trust Center proves exactly what's running:

- Code Verification: Shows that the hash of the deployed agent configuration matches the hash computed from your GitHub repository, so any change to what is deployed is detectable

- Hardware Verification: Proves the agent runs in genuine Intel TDX hardware with memory isolation

- Public Report: A shareable URL you can include in your agent's documentation or smart contract metadata

Real Impact:

For developers building ERC-8004 compliant agents, the Trust Center provides the verifiable execution layer the standard requires. Users can check your Trust Center report before authorizing the agent. You're not asking them to trust you — you're giving them cryptographic proof.

This is a competitive advantage. When users compare agents, yours can say: "Verifiably secure with public proof" while competitors can only say "trust us."

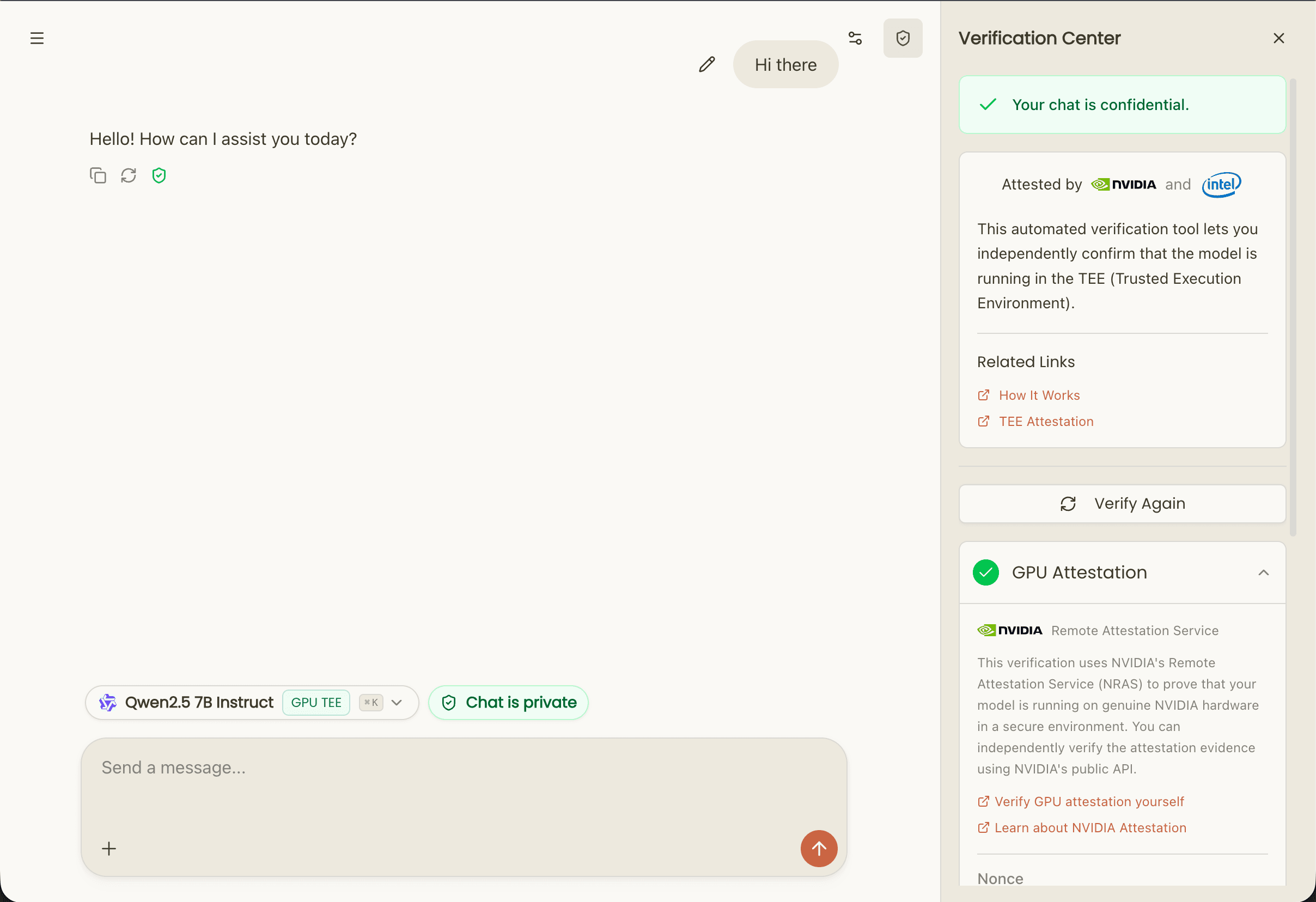

2. Verifiable LLM Inference on TEE GPUs

You claim your API uses GPT-4. But how does the customer know you're not actually running GPT-3.5 to cut costs? Model pricing varies 10-100x across tiers. The incentive to swap models is massive, especially when no one can verify what's actually running.

More critically: who can see your users' chat history? Every prompt, every conversation, every piece of sensitive data flowing through your LLM API. In traditional cloud deployments, the infrastructure provider can log, analyze, or even sell this data. Your customers are trusting you AND your cloud provider with their most sensitive information.

For healthcare, legal, and financial applications, this isn't just about trust — it's about compliance. HIPAA and GDPR require verifiable data isolation. "We use secure infrastructure" doesn't satisfy auditors when they ask "who can access patient conversations?"

Our Infrastructure:

Phala runs large language models on TEE GPUs — specifically Nvidia H200 GPUs with Confidential Computing enabled. This means:

- Chat history is completely private: User prompts and model responses are encrypted in GPU memory

- Model weights are encrypted in GPU memory

- Inference computations are hardware-isolated from the host system

- Even the infrastructure provider (us) cannot access, log, or view your users' conversations

- KMS-protected keys ensure conversation data is encrypted at rest and in transit within the TEE

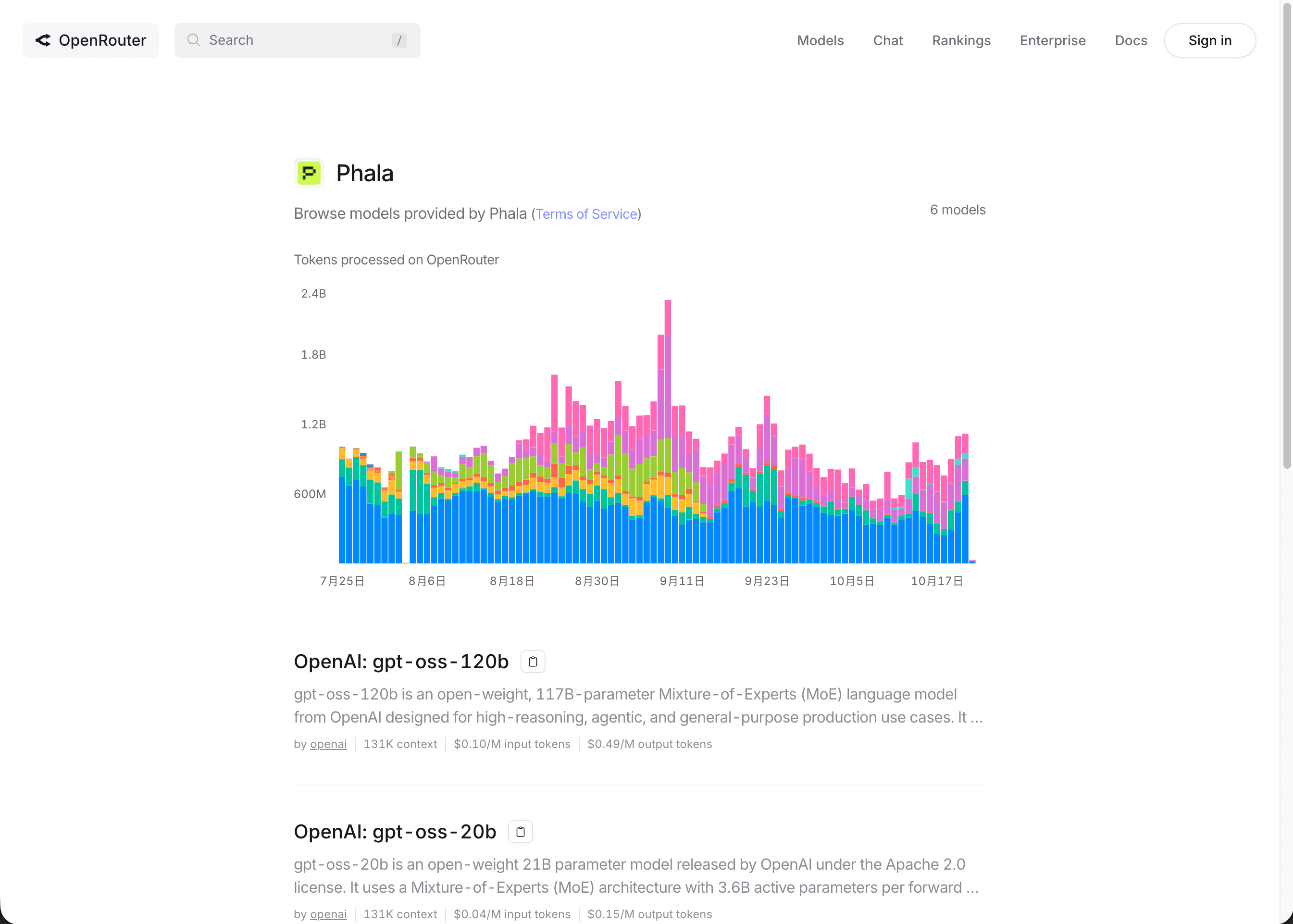

We serve these models through OpenRouter — a leading LLM API aggregator that routes requests to the best model for each use case.

Trust Center Verification:

Every LLM inference request is backed by verifiable TEE execution:

- GPU Attestation: Cryptographic proof from Nvidia that inference runs on genuine Confidential Computing hardware

- Model Container Verification: The exact Docker image containing the LLM is verified against the deployed hash

- Continuous Proof: The Trust Center report updates with every deployment, so verification is always current

Real Impact:

- Guaranteed Chat Privacy: Cryptographic proof that users' conversation history is protected even from infrastructure providers — no logging, no data mining, no surveillance

- Justify Premium Pricing: Show customers cryptographic proof they're getting the premium model they're paying for

- Regulatory Compliance: HIPAA and GDPR auditors get verifiable evidence of data isolation and conversation privacy

- Competitive Positioning: We're the first and only verifiable LLM API in production with provable chat history protection

When a healthcare provider asks "how do I know patient conversations are private?" — you don't say "trust us." You share your Trust Center report showing TEE-based isolation and KMS-protected encryption keys.

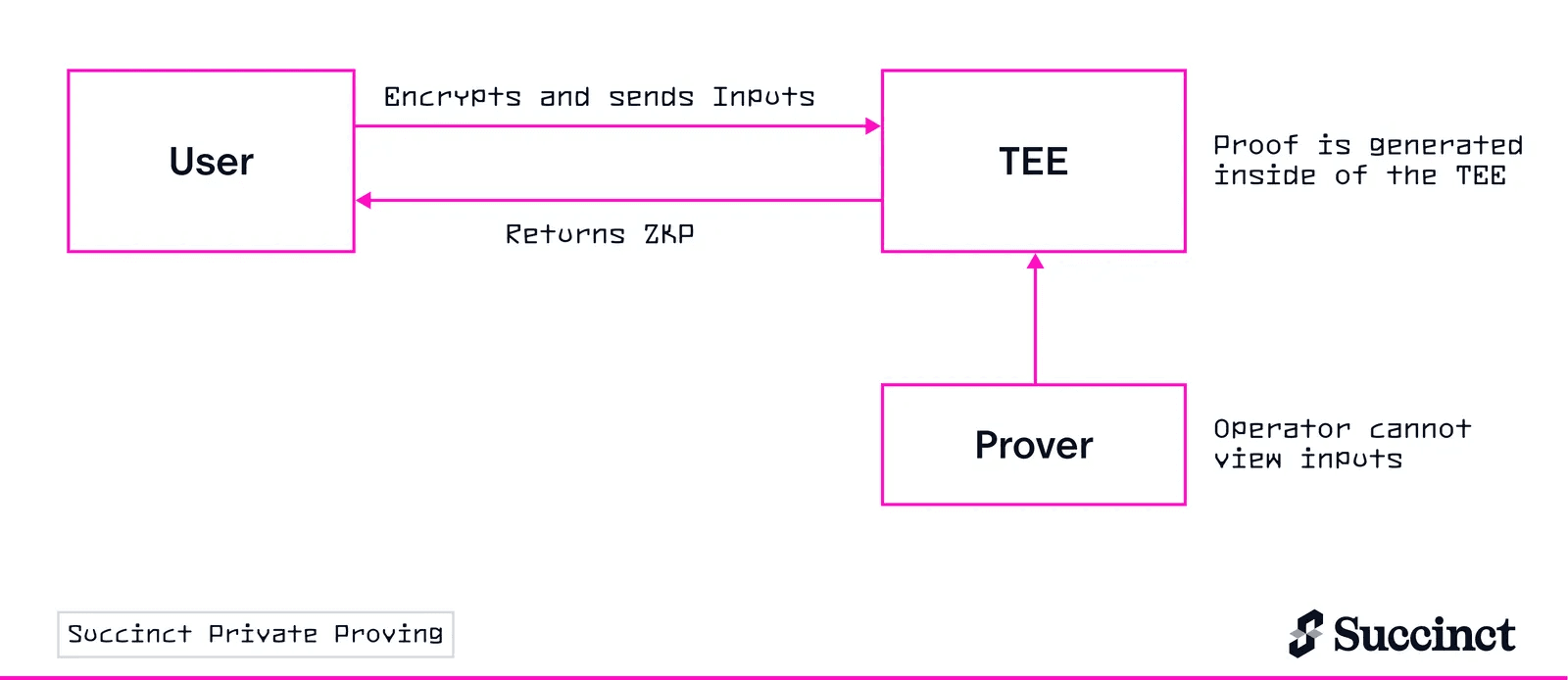

3. Verifiable Zero-Knowledge Proof Generation

Zero-knowledge proofs are cryptographic marvels — they let you prove a computation was performed correctly without revealing the inputs. This powers privacy-preserving rollups, confidential DeFi, and secure blockchain bridges.

But there's a hidden problem: ZK proofs hide data from the verifier, not from the prover.

During proof generation, your private witness data — transaction amounts, account balances, trading positions — must be processed by whoever runs the proving infrastructure. In traditional ZK systems, you're trusting the prover operator not to look at, log, or sell your sensitive data.

Our Partners Solving This:

Succinct Labs launched Private Proving on their Prover Network, powered by Phala's TEE infrastructure:

- $100M+ daily volumes secured on Hibachi perps exchange

- Witness data encrypted during proof generation in TEE

- Traders' positions and strategies hidden even from prover operators

- First and only ZK proving infrastructure with hardware-level privacy

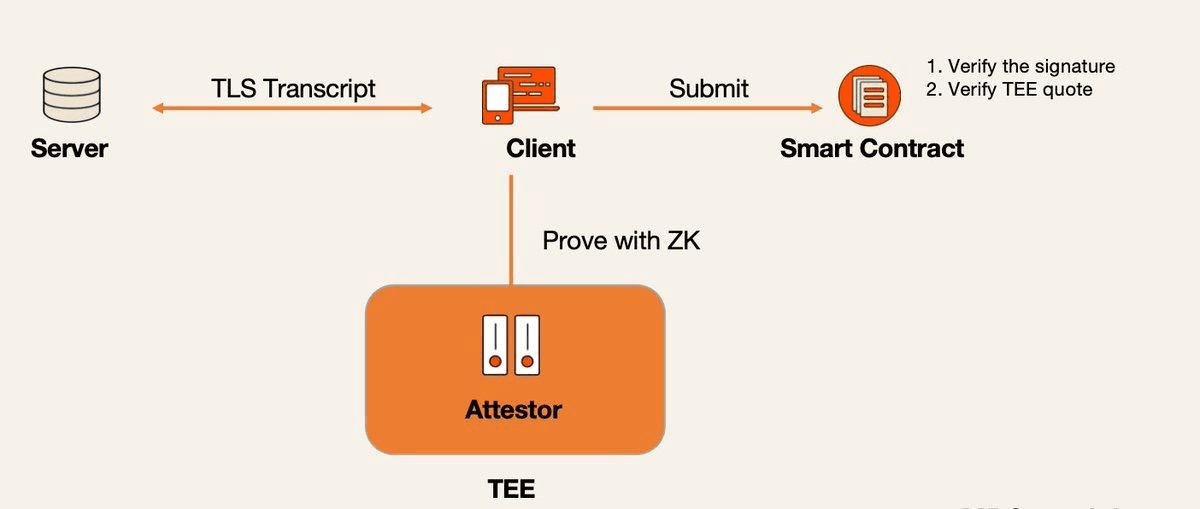

Primus Labs uses TEE to solve the malicious attestor problem in zkTLS:

- zkTLS attestors run inside Phala's TEE using dstack, preventing service providers from interfering with the attestation process

- Even Primus cannot access or manipulate the zkTLS protocol execution — it's cryptographically isolated in hardware

- Smart contracts verify both the attestor's signature AND the TEE quote, proving the zkTLS protocol executed securely

- Eliminates collusion risk: attestors cannot forge proofs or reject valid data requests

Trust Center's Role:

For ZK proving in TEE, the Trust Center verifies:

- Genuine TEE Hardware: Cryptographic proof the prover runs on real Intel TDX / Nvidia CC hardware

- Encrypted Witness Data: Proves witness data stays encrypted in hardware-protected memory during proving

- Code Integrity: The exact ZK prover software (like Succinct's SP1 zkVM) matches the published version

- Public Attestation: Anyone can verify the proving environment isn't compromised

Real Impact:

For the first time, you get privacy AND verifiability. Witness data is protected by hardware isolation, AND you can cryptographically prove that protection is real. No more "trust the prover operator" — just verify the Trust Center report.

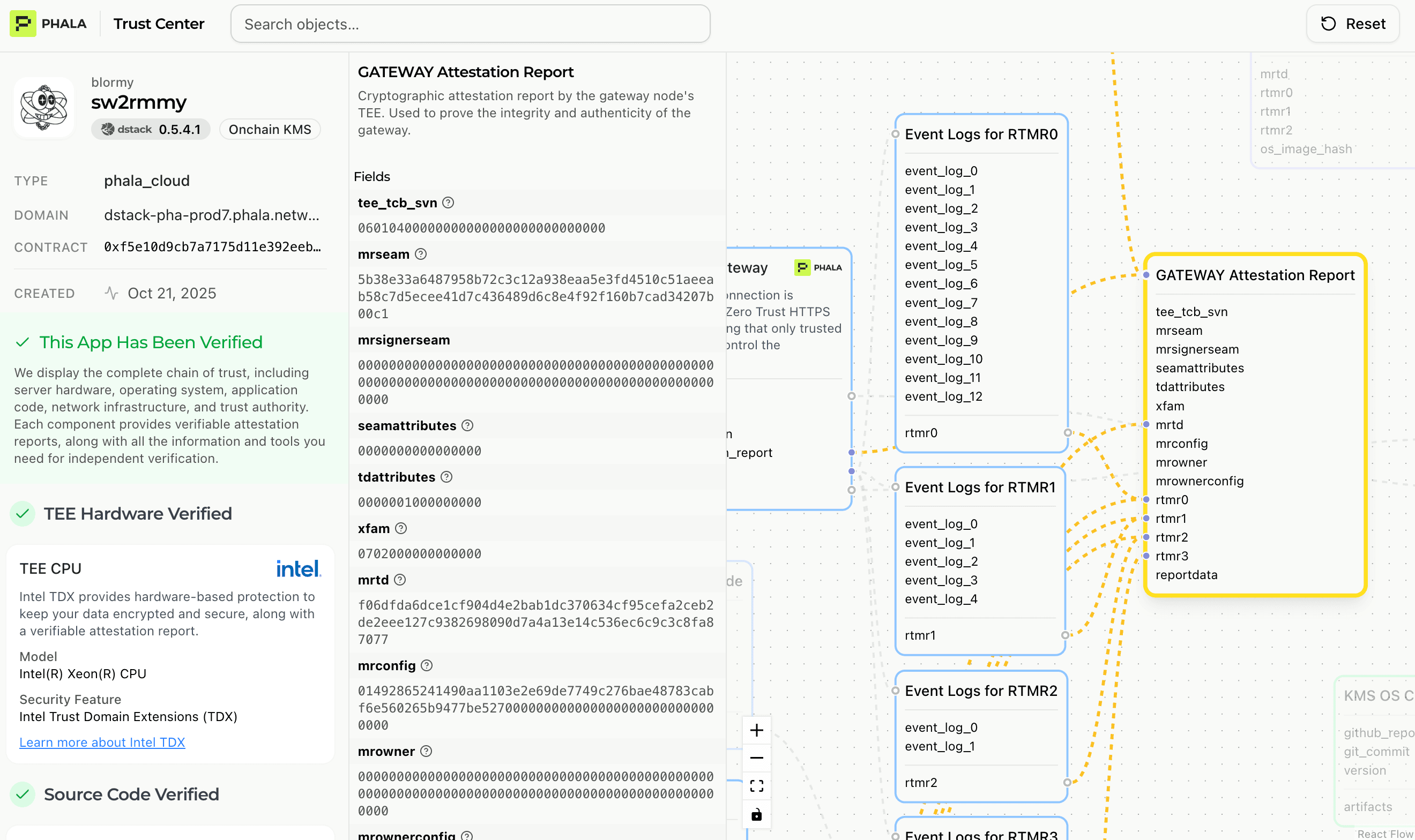

How Trust Center Works (No Cryptography Degree Required)

The Trust Center performs four automated checks that anyone can understand and verify:

Check 1: Real Security Hardware?

Like checking a diamond's authenticity certificate, the Trust Center verifies your app runs on genuine security hardware.

What's checked:

- Cryptographic signature from Intel proving genuine TDX processor

- Cryptographic signature from Nvidia proving genuine Confidential Computing GPU

- Platform certificates validated back to manufacturer root CA

- TCB (Trusted Computing Base) version checked for known vulnerabilities

Why it matters: Ensures you're running on real hardware-isolated environments, not simulated TEEs or compromised systems. The signatures can't be forged — they're generated by the CPU/GPU itself.

How to verify: The report shows green checkmarks when hardware attestation passes. Click to see the full certificate chain and signature details.
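To make the TCB check above more concrete, here is a minimal sketch of how reported security version numbers (SVNs) could be compared against a list of known levels. Everything in it is illustrative: the level table, the status strings, and the `evaluate_tcb` helper are hypothetical, and a real verifier works from signed collateral published by the manufacturer rather than a hard-coded list.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class TcbLevel:
    svns: Tuple[int, ...]  # minimum SVN required for each component
    status: str            # status assigned to platforms meeting this level


# Hypothetical table, ordered from newest level to oldest.
KNOWN_LEVELS = (
    TcbLevel(svns=(5, 5, 3), status="UpToDate"),
    TcbLevel(svns=(4, 4, 3), status="OutOfDate"),
    TcbLevel(svns=(2, 2, 1), status="Revoked"),
)


def evaluate_tcb(reported: Sequence[int], levels=KNOWN_LEVELS) -> str:
    """Return the status of the first (newest) level the platform satisfies."""
    for level in levels:
        same_shape = len(reported) == len(level.svns)
        if same_shape and all(r >= m for r, m in zip(reported, level.svns)):
            return level.status
    return "Unrecognized"


def passes_hardware_check(reported: Sequence[int]) -> bool:
    """Only a fully up-to-date platform earns the green checkmark in this sketch."""
    return evaluate_tcb(reported) == "UpToDate"


print(evaluate_tcb((5, 5, 3)))  # meets the newest level
print(evaluate_tcb((4, 5, 3)))  # first component lags behind
```

The design choice worth noting is the ordering: because the table is scanned newest-first, a platform is labelled with the best level it fully satisfies, and a single lagging component is enough to drop it to an older level.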

Check 2: Exact Code Match?

This is the core verification: proving the deployed application contains exactly the code you intended.

What's checked:

- SHA-256 hash of your Docker compose configuration

- Comparison of that hash with the one computed from your source code

- Application measurement register (RTMR3), which records the app-id, compose-hash, and instance-id

Why it matters: Anyone can recompute the hash from your source code and verify it matches the deployed version. Perfect match = zero hidden modifications.
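For readers curious how a measurement register can "contain" several values at once: TDX runtime measurement registers are extended rather than written, meaning each new value is folded in by hashing the current register together with a 48-byte digest using SHA-384. The sketch below replays a small event list that way. The event payloads and the way each event is reduced to a digest (`name:payload`) are assumptions made for illustration, not dstack's actual event encoding.

```python
import hashlib

RTMR_SIZE = 48  # length of a SHA-384 digest in bytes


def extend(rtmr: bytes, digest: bytes) -> bytes:
    """Fold one 48-byte digest into the register: new = SHA384(old || digest)."""
    if len(rtmr) != RTMR_SIZE or len(digest) != RTMR_SIZE:
        raise ValueError("register and digest must both be 48 bytes")
    return hashlib.sha384(rtmr + digest).digest()


def replay(events) -> bytes:
    """Recompute the final register value from an ordered list of events."""
    rtmr = bytes(RTMR_SIZE)  # registers start as all zeros
    for name, payload in events:
        # Assumption: each event is reduced to SHA384("name:payload").
        event_digest = hashlib.sha384(name.encode() + b":" + payload).digest()
        rtmr = extend(rtmr, event_digest)
    return rtmr


# Hypothetical event values, used only to exercise the functions.
events = [
    ("app-id", bytes.fromhex("aa" * 20)),
    ("compose-hash", bytes.fromhex("bb" * 32)),
    ("instance-id", bytes.fromhex("cc" * 20)),
]
tampered = replay(
    [events[0], ("compose-hash", bytes.fromhex("bd" * 32)), events[2]]
)

print(replay(events).hex())
print(replay(events) != tampered)
```

Because every step hashes the previous value, changing any event, or even reordering events, yields a different final register, which is what lets a verifier tie the hardware-signed value back to one specific compose hash.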

How to verify yourself:

# Compute the compose hash

sha256sum compose_file.json

# Compare with the hash in Trust Center's "Source Code Verification"

# They must match exactly

No cryptography expertise needed: just run one command and compare two strings.
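If you would rather script the comparison than eyeball two long strings, the same check can be sketched in a few lines of Python. The file name and the `matches_report` helper are placeholders chosen for this example; the reported hash is whatever value you copy from the "Source Code Verification" section of your report.

```python
import hashlib
from pathlib import Path


def compose_hash(path: str) -> str:
    """SHA-256 of the file's exact bytes, as printed by sha256sum."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def matches_report(path: str, reported_hash: str) -> bool:
    """Compare the local hash with one pasted from the report.

    Surrounding whitespace and letter case in the pasted value are ignored.
    """
    return compose_hash(path) == reported_hash.strip().lower()


if __name__ == "__main__":
    import sys

    # Usage: python check_compose.py compose_file.json <hash-from-report>
    if len(sys.argv) == 3:
        ok = matches_report(sys.argv[1], sys.argv[2])
        print("match" if ok else "MISMATCH")
```

Keep in mind that the hash covers the exact bytes of the file, so reformatting the JSON or changing line endings produces a different value even when the configuration is logically the same.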

Check 3: Protected Encryption Keys?

The most critical security component: verifying that encryption keys for your data and memory are managed securely.

What's checked:

- Key Management Service (KMS) itself runs in TEE (hardware attestation verified)

- Encryption keys are derived from hardware-unique secrets that never leave the TEE

- Keys are bound to specific application measurements — different code = different keys

- Memory encryption keys tied to TDX/SEV-SNP hardware protection

Why it matters: In a traditional cloud, encryption keys are stored in software key vaults that the cloud provider can access. With a TEE-based KMS, keys are cryptographically bound to your specific application running on specific hardware. Even Phala cannot extract these keys, because they exist only inside the hardware-protected enclave.

How to verify: The Trust Center shows KMS attestation status and verifies the KMS service itself meets the same hardware and code integrity checks as your application.
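The idea that "different code = different keys" is easiest to see in a toy key derivation. The sketch below is not dstack's actual KDF; it assumes a `root_secret` standing in for the hardware-protected secret held by the KMS and uses plain HMAC-SHA256 to show how mixing the application's identity into the derivation binds the key to it. The function name and parameters are hypothetical.

```python
import hashlib
import hmac


def derive_app_key(
    root_secret: bytes, app_id: str, compose_hash: str, purpose: str
) -> bytes:
    """Derive a 32-byte key bound to one application measurement.

    Each field is length-prefixed so that field boundaries are unambiguous.
    """
    fields = (app_id.encode(), compose_hash.encode(), purpose.encode())
    context = b"".join(len(f).to_bytes(2, "big") + f for f in fields)
    return hmac.new(root_secret, context, hashlib.sha256).digest()


# Placeholder values; in a real deployment the secret never leaves the TEE.
root_secret = b"\x01" * 32
key_v1 = derive_app_key(root_secret, "my-app", "ab" * 32, "disk-encryption")
key_v2 = derive_app_key(root_secret, "my-app", "cd" * 32, "disk-encryption")

print(key_v1 != key_v2)
```

Since the compose hash is part of the derivation input, a modified deployment cannot reproduce the original application's key, even if it runs on the same hardware and talks to the same KMS.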

Check 4: Secure Network?

For applications exposed via custom domains with dstack-gateway, this verifies zero-trust HTTPS.

What's checked:

- Gateway itself runs in TEE (verified via hardware attestation)

- TLS certificate private keys generated entirely within TEE

- DNS CAA records locked to TEE-controlled certificate authority

- Certificate Transparency logs for public audit trail

Why it matters: Traditional HTTPS terminates at cloud load balancers where providers can intercept traffic. With Phala's TEE-based gateway, even we cannot decrypt your TLS traffic — everything stays encrypted until it reaches your application's TEE.

How to verify: The report shows which domain is verified and links to the public Certificate Transparency logs.
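As a rough illustration of the CAA check, the sketch below parses CAA records in their usual text form and tests whether every `issue` record names one expected issuer and one expected account. Binding issuance to a specific account via the `accounturi` parameter is defined in RFC 8657; whether dstack-gateway expresses its lock exactly this way is an assumption here, and the domain names and URIs are placeholders.

```python
def parse_caa(record: str):
    """Split a record such as '0 issue "ca.example; accounturi=..."'."""
    flags, tag, value = record.split(maxsplit=2)
    value = value.strip().strip('"')
    issuer, *raw_params = [part.strip() for part in value.split(";")]
    params = dict(p.split("=", 1) for p in raw_params if "=" in p)
    return int(flags), tag.lower(), issuer, params


def caa_locked_to(records, expected_issuer: str, expected_account_uri: str) -> bool:
    """True only if every 'issue' record pins the expected issuer and account."""
    issue_records = [r for r in map(parse_caa, records) if r[1] == "issue"]
    if not issue_records:
        return False  # no restriction published at all
    return all(
        issuer == expected_issuer
        and params.get("accounturi") == expected_account_uri
        for _, _, issuer, params in issue_records
    )


# Placeholder records for a hypothetical domain.
records = ['0 issue "ca.example; accounturi=https://ca.example/acct/42"']
print(caa_locked_to(records, "ca.example", "https://ca.example/acct/42"))
```

The check is deliberately strict: a single extra `issue` record pointing at another issuer or account makes it fail, because that record would allow certificates to be issued outside the TEE.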

Why Phala is First and Only

The Trust Center isn't just a feature — it's a fundamental architectural difference that no other platform offers.

Automated Public Verification

Competitors' Approach:

- Offer TEE infrastructure, but verification requires manual attestation tools

- Or provide attestation tools, but you need cryptography expertise to use them

- Attestation data is raw and technical — unusable for compliance officers or customers

Trust Center Approach:

- Every deployment automatically gets a verification report

- Zero configuration, no SDK integration, no API calls

- Human-readable report with green checkmarks and plain English explanations

- Public URL you can share with anyone

Public Auditability

Traditional TEE: "Trust us, your app is running in a TEE."

Trust Center: "Here's the public proof. Verify it yourself: [your-report-url]"

This shift from trust to verification is fundamental. You're not asking customers to take your word — you're giving them cryptographic evidence they can independently verify.

See It In Action

Don't take our word for it — verify it yourself.

Live Example Report: https://trust.phala.com/app/7e0817205044eb202a590a0d236ccb4d66140197

Click through each verification section:

- Hardware verification showing Intel TDX attestation

- Source code verification with compose hash

- Operating system integrity measurements

- Network verification for the domain

This is what your customers, auditors, and compliance officers will see when you share your Trust Center report.

Deploy Your First Verifiable App

Getting started takes less than 10 minutes:

- Package your application as a Docker image (you probably already have this)

- Visit Phala Cloud and create an account

- Deploy your application through the web interface

- Receive your Trust Center report automatically at https://trust.phala.com/app/{your-app-id}

No code changes. No SDK integration. No attestation libraries. Just deploy and verify.

Resources

- Trust Center Platform: https://trust.phala.com

- Phala Cloud: https://cloud.phala.network

- Documentation

- GitHub: https://github.com/Phala-Network/trust-center

Partners Building with Trust Center

- Succinct Labs: Private Proving on Prover Network

- Primus Labs: Compliant Private Computation

- OpenRouter: Verifiable LLMs from Phala

- ERC-8004: Ethereum Agent Standard